We are hearing more and more about sustainable AI computation and FuriosaAI has a solution for that with RNGD. This is almost the opposite of many AI compute platforms we have heard about today. Instead of going for maximum performance, this is a lower power compute solution.

This is the last talk of the day after over a dozen, and it will be conducted live, so please excuse any typos.

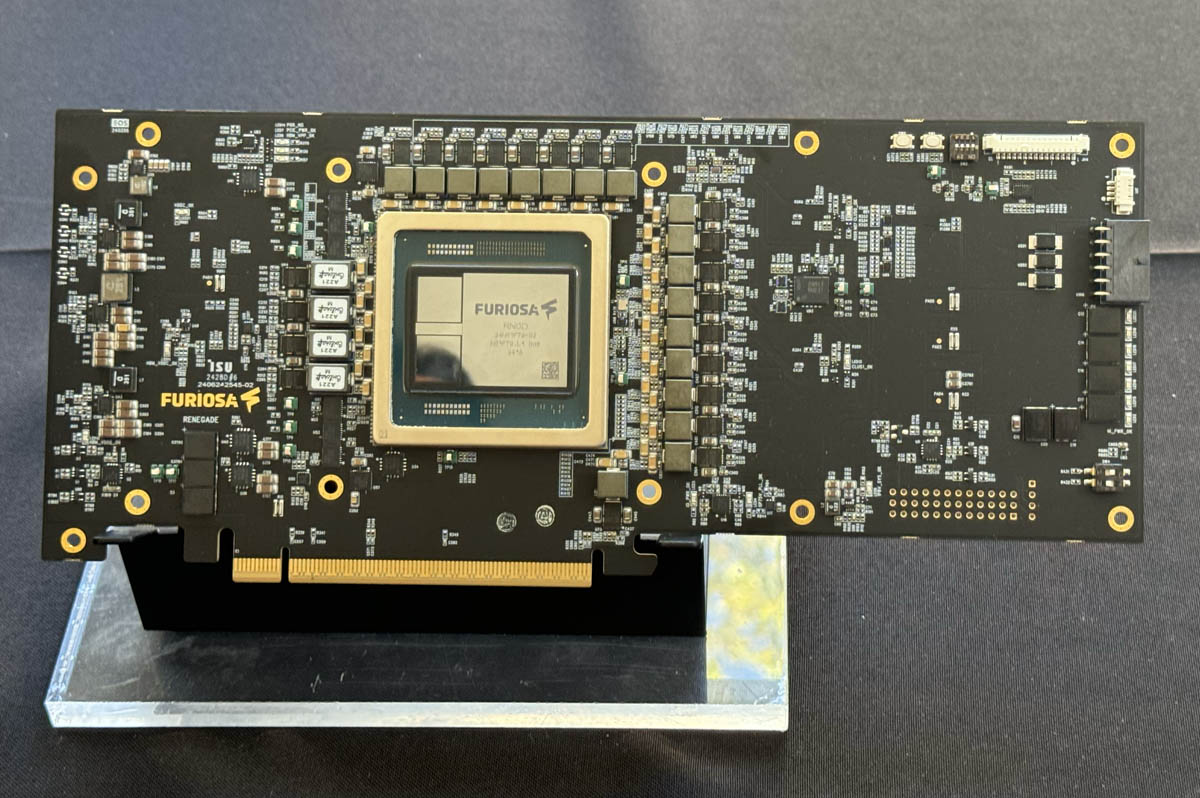

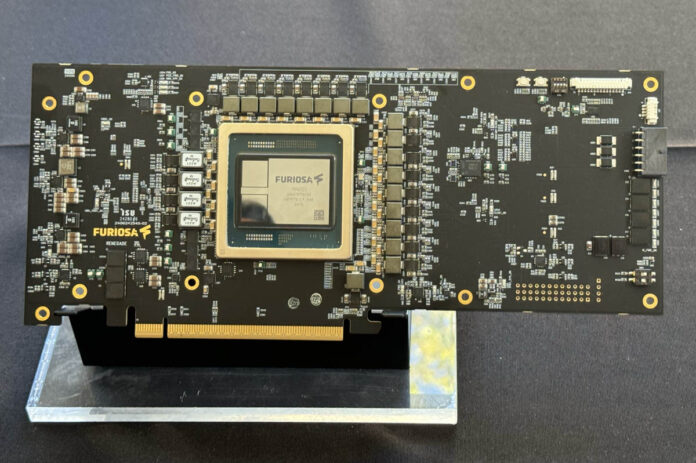

FuriosaAI RNGD processor for sustainable AI computing

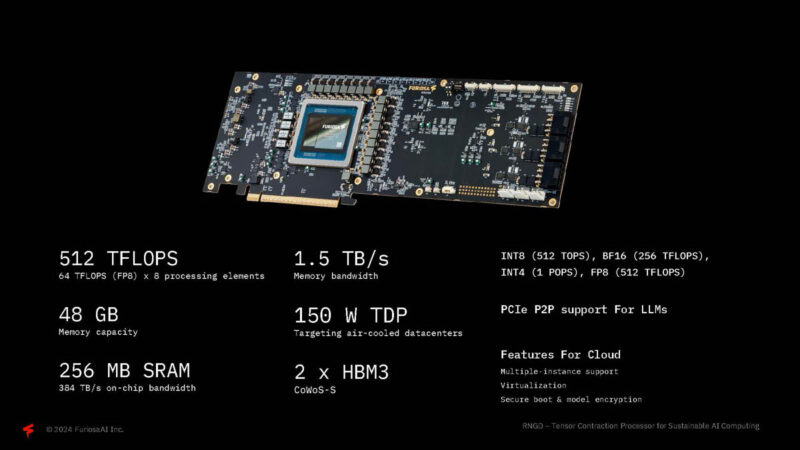

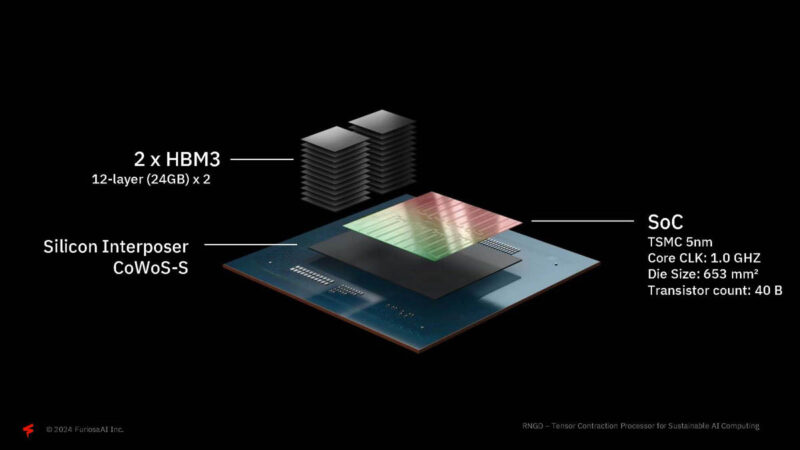

Here are the specs of the card. It is not specifically designed to be the fastest AI chip on the market.

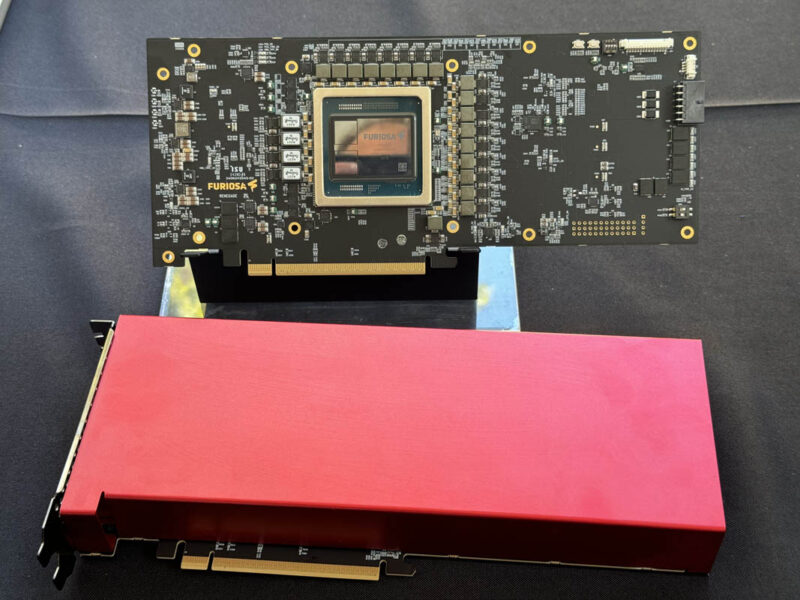

Here’s a look at the card with its cooler.

The target TDP for air-cooled data centers is only 150 W.

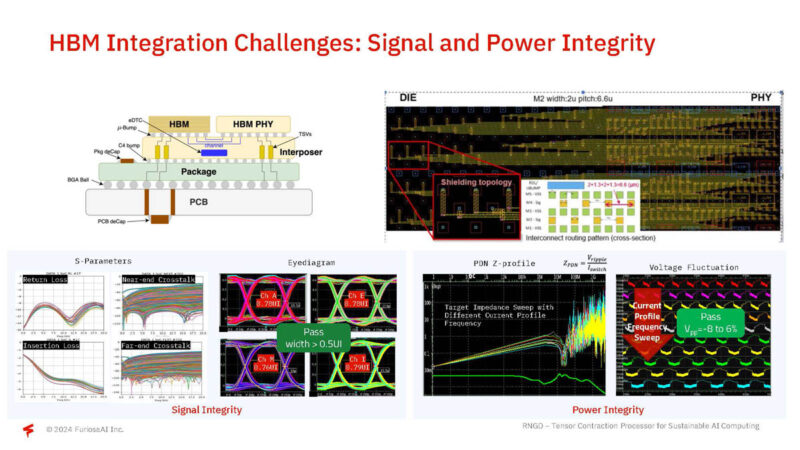

The structure is made using 12-layer HBM3 and TSMC CoWoS-S and a 5 nm process.

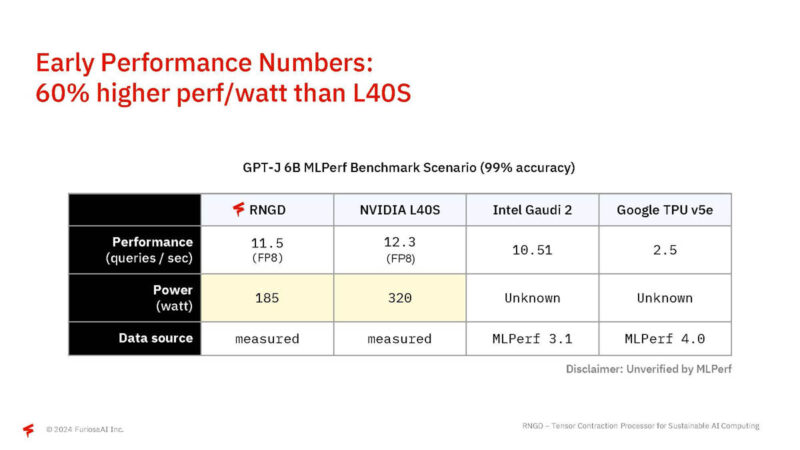

Instead of focusing on the H100 or B100, FuriosaAI is targeting the NVIDIA L40S. We wrote a big article about the L40S a while back. The goal is not only to offer similar performance, but also to deliver that performance while consuming less power.

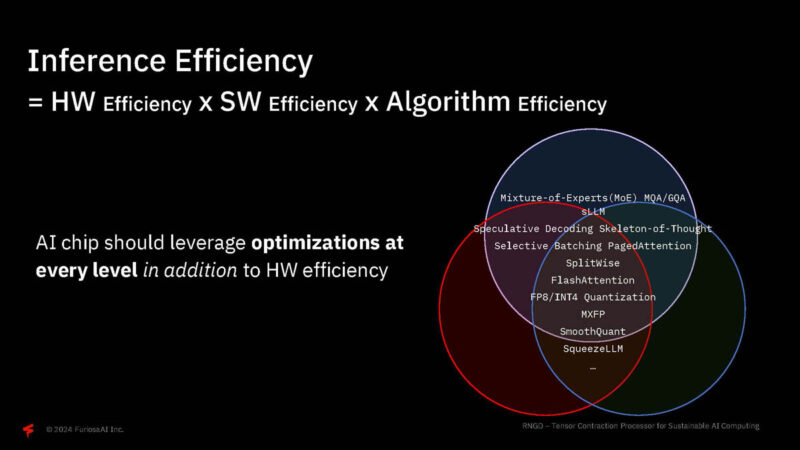

Efficiency comes from hardware, software and algorithm.

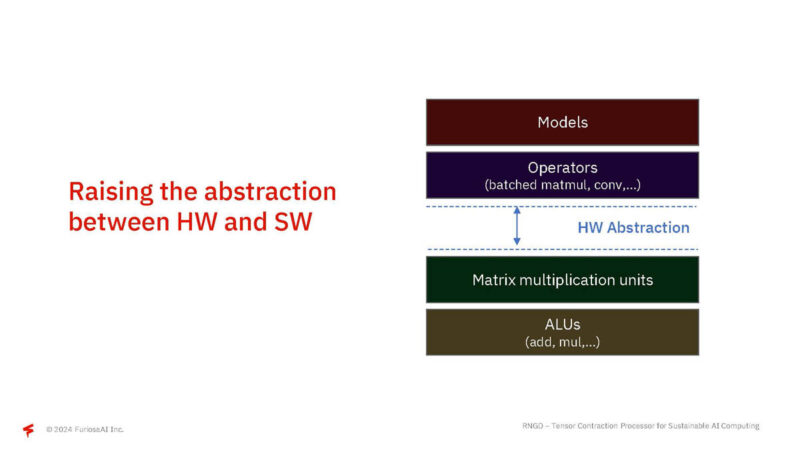

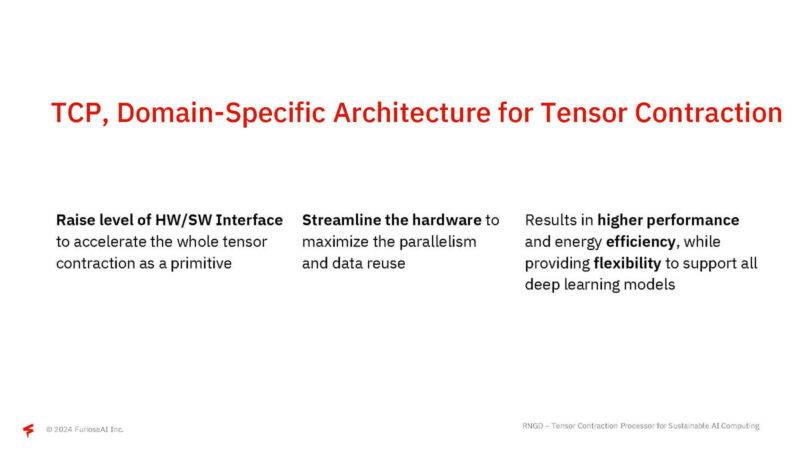

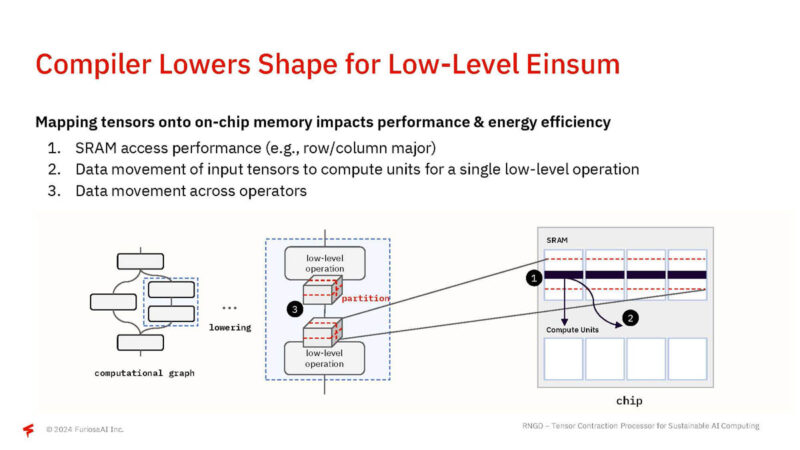

One of the challenges for FuriosaAI was to work on the abstraction layer between hardware and software.

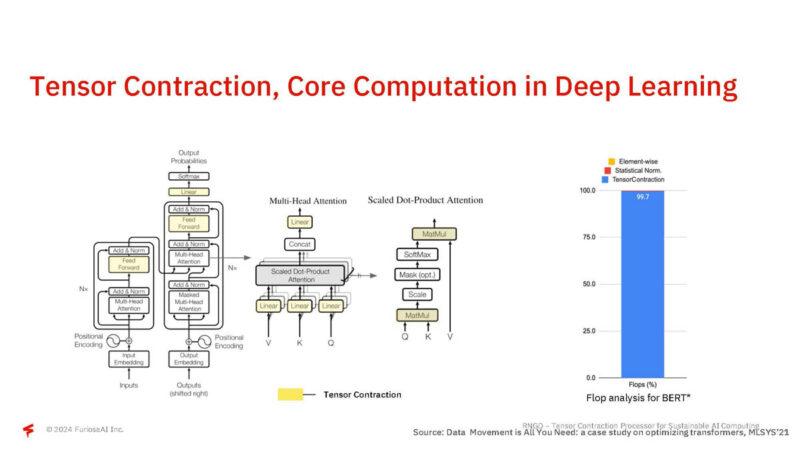

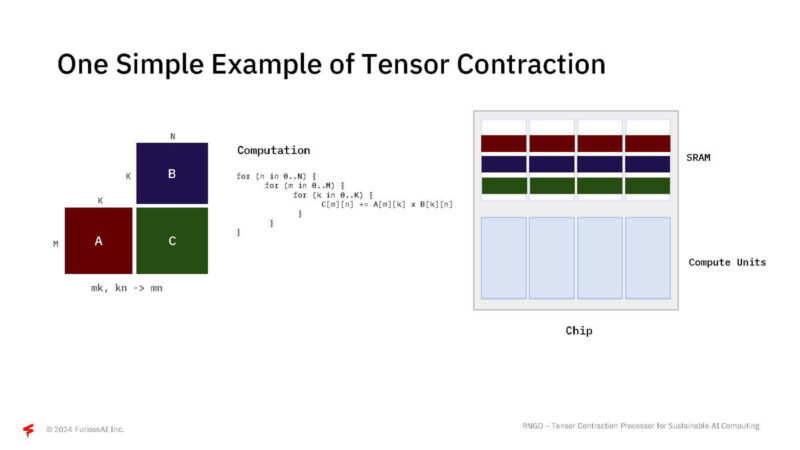

Tensor contraction is one of FuriosaAI’s big operations. In BERT, it accounted for over 99% of FLOPS.

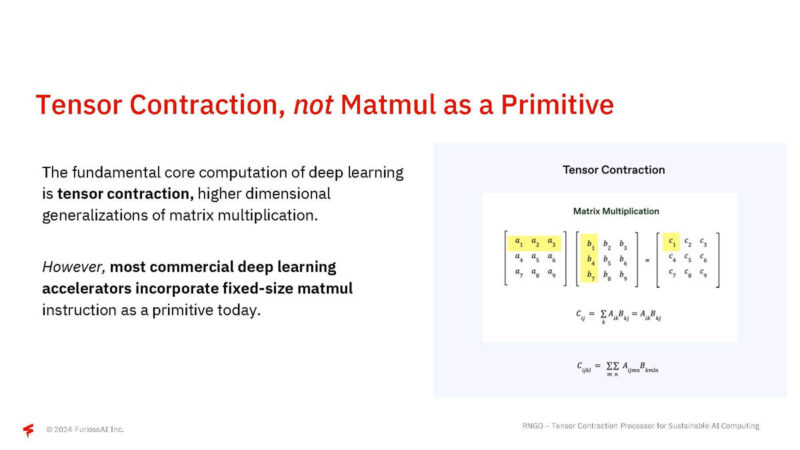

Normally we have matrix multiplication as a primitive instead of tensor contraction.

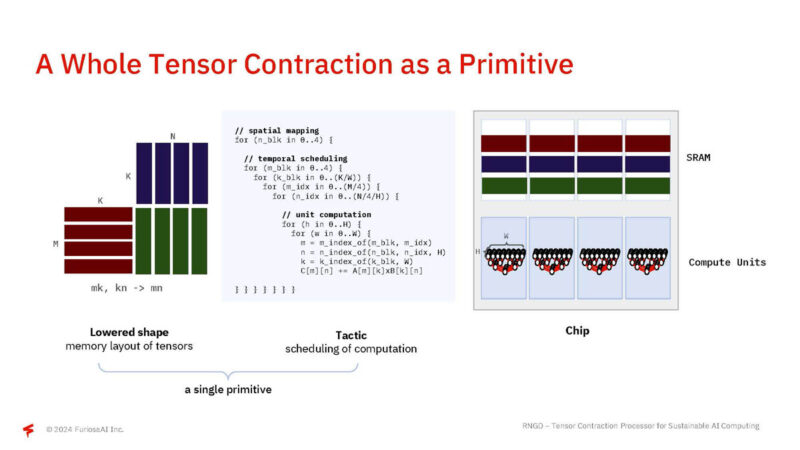

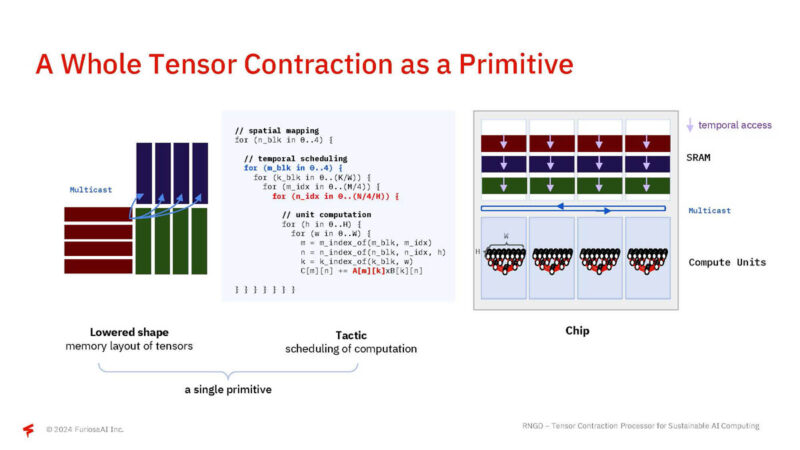

Instead, the abstraction occurs at the level of tensor contraction.

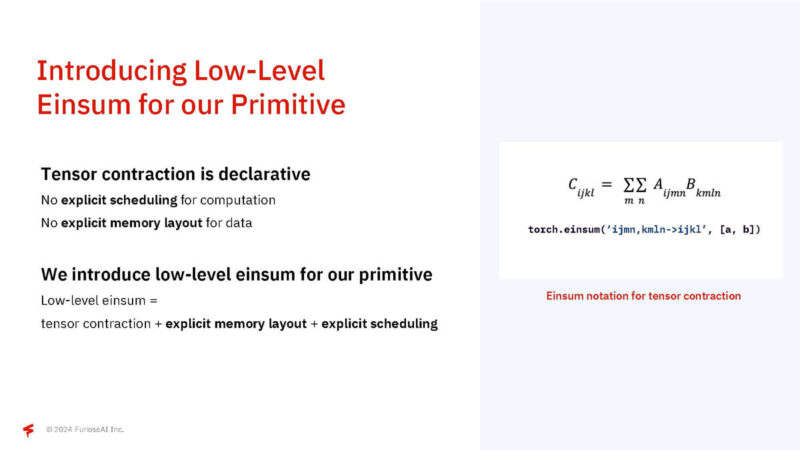

Furiosa adds a low-level summation for his primitive.

Here the matrices A and B are multiplied to produce C.

Furiosa then takes this over and plans it on the actual architecture with storage and computing units.

From here, an entire tensor contraction can be a primitive.

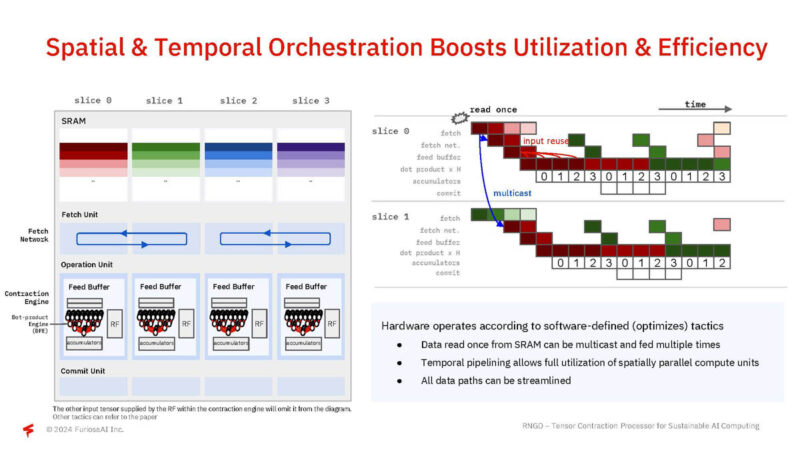

By taking spatial and temporal orchestration into account, they can increase efficiency and utilization.

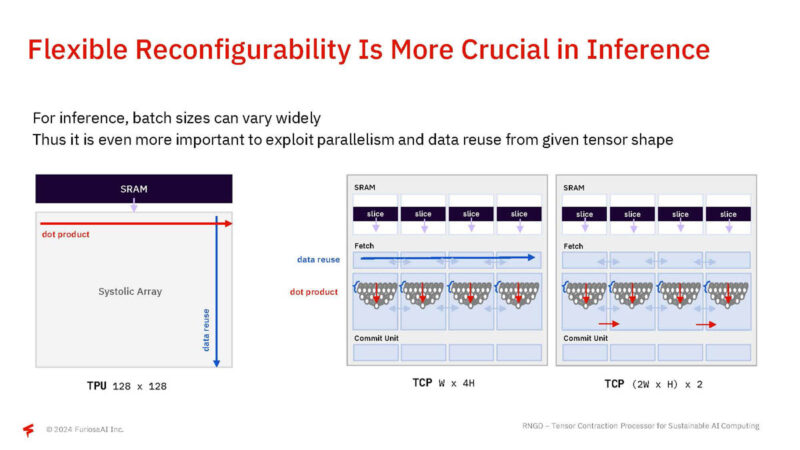

Furiosa says it has flexible reconfiguration, which is important to keep performance high with varying batch sizes.

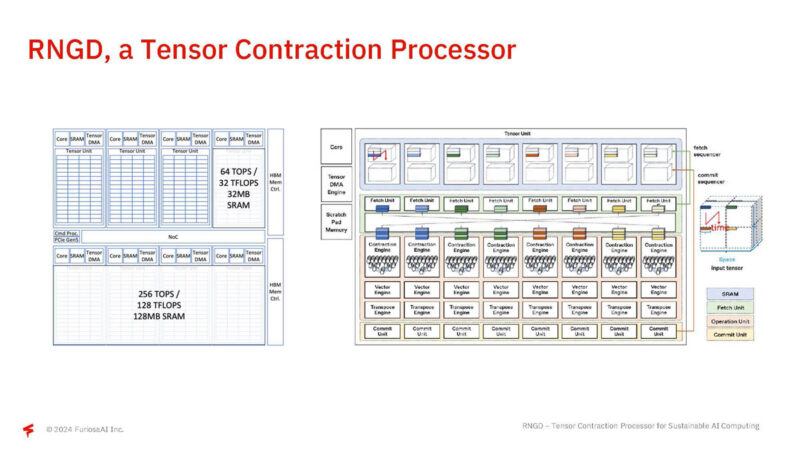

Here is a look at the RNGD implementation.

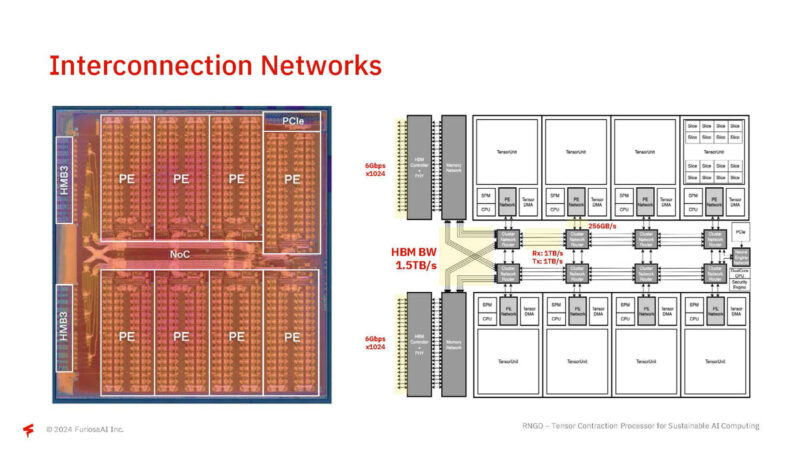

Here are the connection networks, also for accessing the RAM.

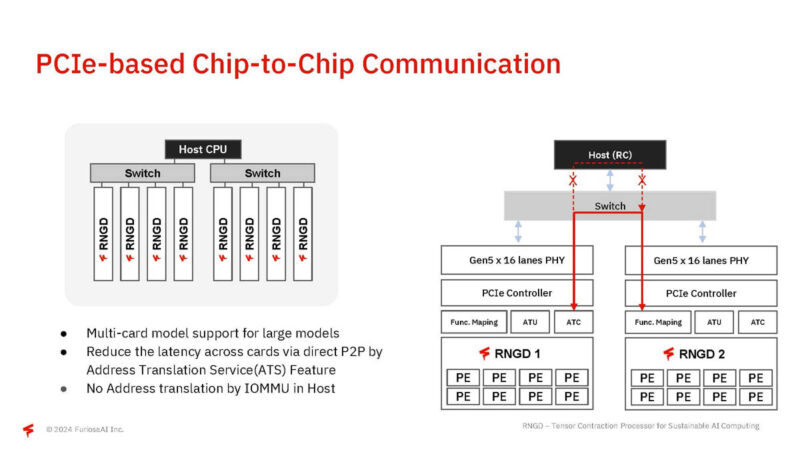

Furiosa uses PCIe Gen5 xq6 for chip-to-chip communication. It also uses P2P via a PCIe switch for direct GPU-to-GPU communication, so if XConn gets it right, this is a fantastic product.

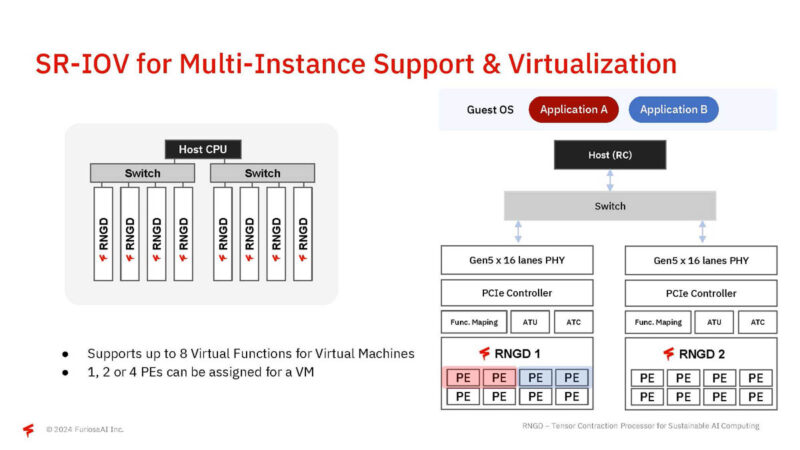

Furiosa supports SR-IOV for virtualization.

The company has worked on signal and power integrity to ensure reliability.

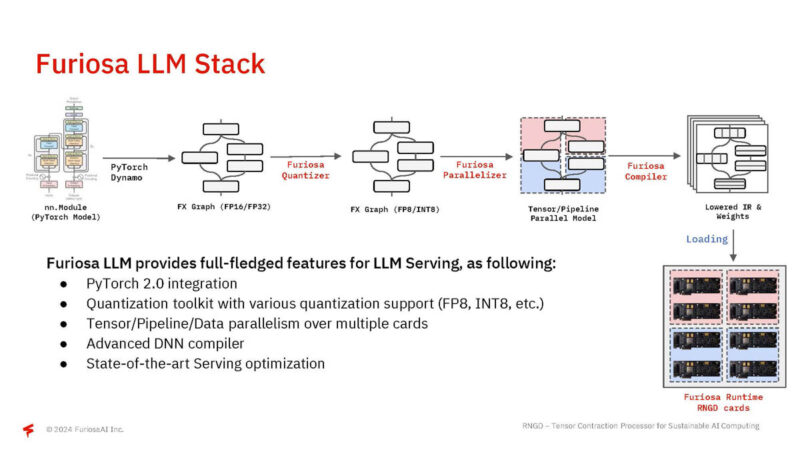

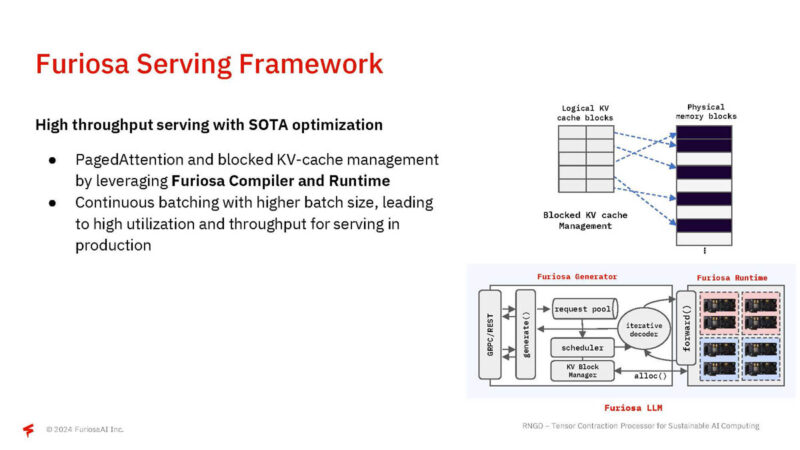

This is how Furiosa LLM works in the form of a flow chart.

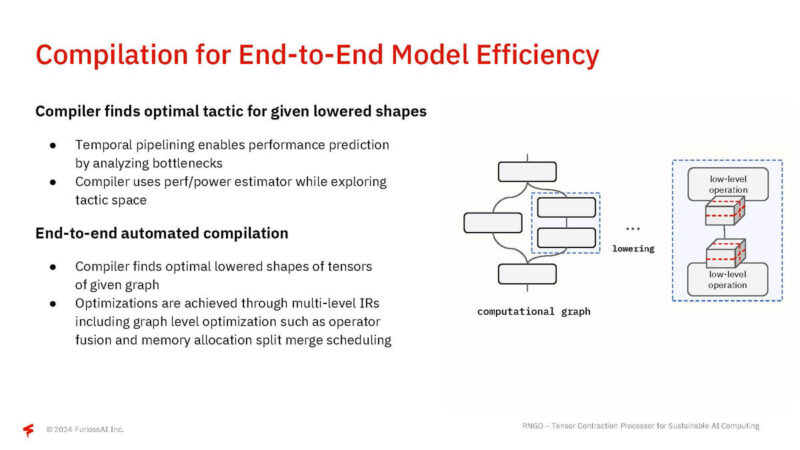

The compiler compiles each partition that is assigned to multiple devices.

The compiler optimizes the model for performance improvements and energy efficiency.

For example, the serving framework performs continuous batching to achieve better utilization.

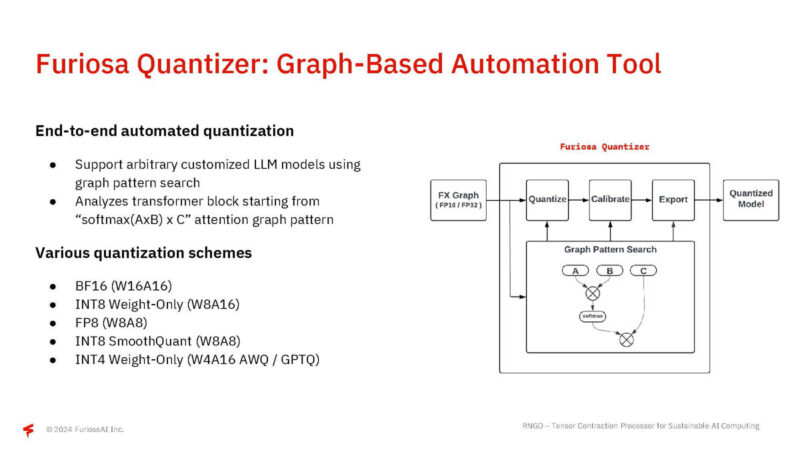

The company has a graph-based automation tool to support quantization. Furiosa supports a number of different formats, including FP8 and INT4.

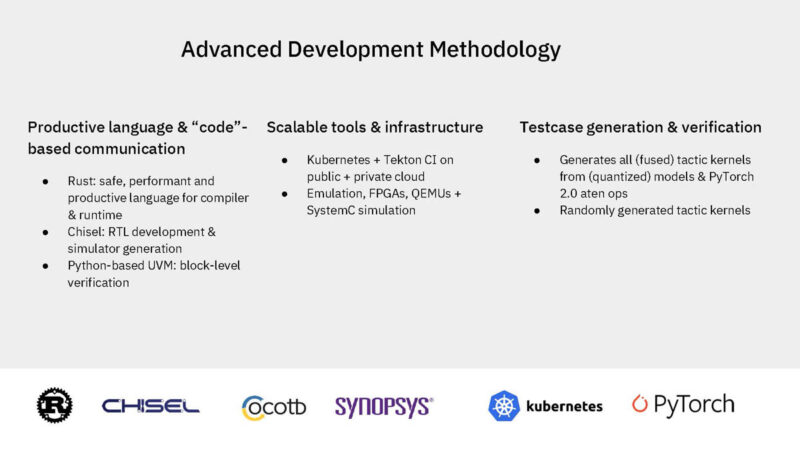

Here is the company’s development methodology.

Closing words

There was a lot to say here. The short summary is that the company is using its compilers and software to map AI inference into its lower-power SoC to provide lower-power AI inference.