In brief: Companies working in the field of generative AI keep making outrageous promises about unprecedented productivity gains and cost reductions. Meta is now turning to 3D model creation, which a novel machine learning algorithm can seemingly handle with little effort and very little input data.

Researchers from Meta and the University of Oxford collaborated on VFusion3D, a new method for building scalable 3D generative models. The technology was conceived as a way to overcome the main problem facing foundation 3D generative models: there is not enough 3D data available to train them.

Images, text, and videos are plentiful, the researchers explain, and can be used to train “traditional” generative AI algorithms. 3D assets, however, are not so readily available. “This leads to a significant difference in size compared to the huge amounts of other data types,” the study says.

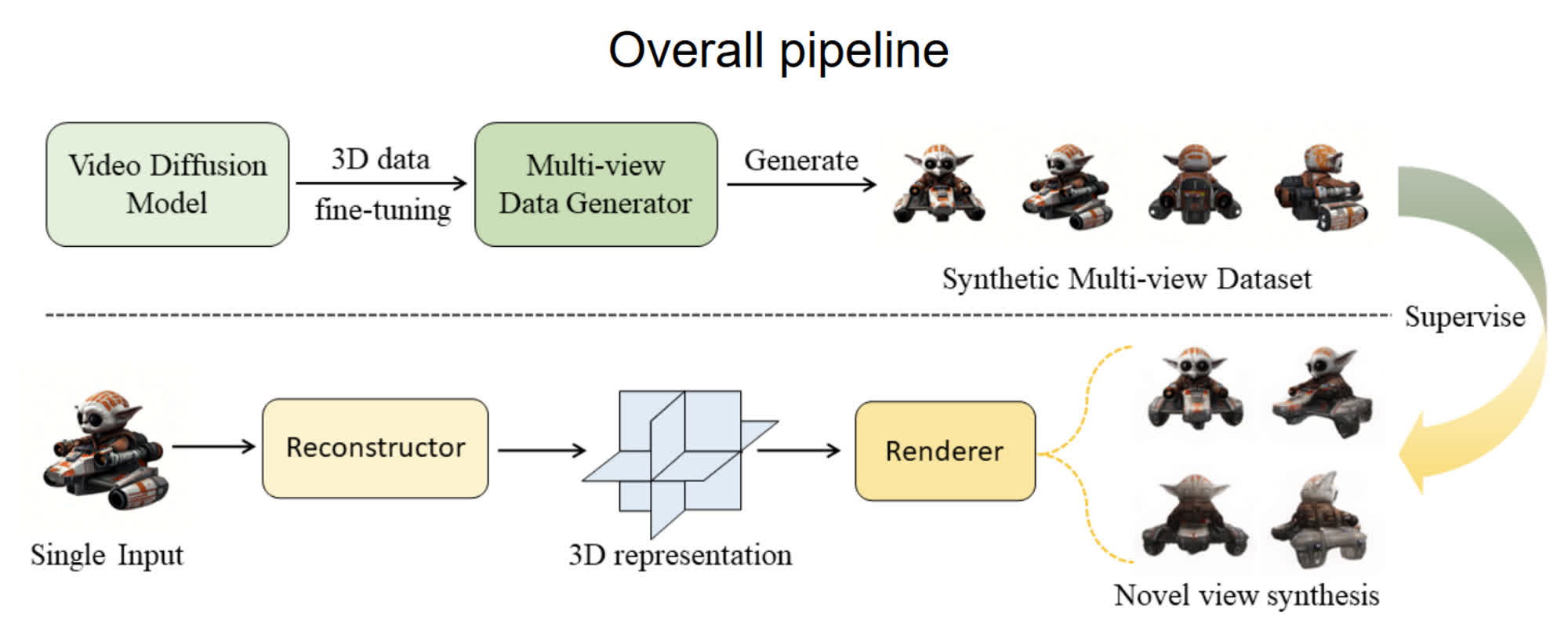

VFusion3D can overcome this problem by using a video diffusion model, itself trained on extensive amounts of text, images, and videos, as a source of 3D data. Fine-tuning “unlocks” the video model's ability to generate multiple views of the same object, and the model can then be used to generate a new large-scale synthetic dataset to feed generative 3D models in the future.

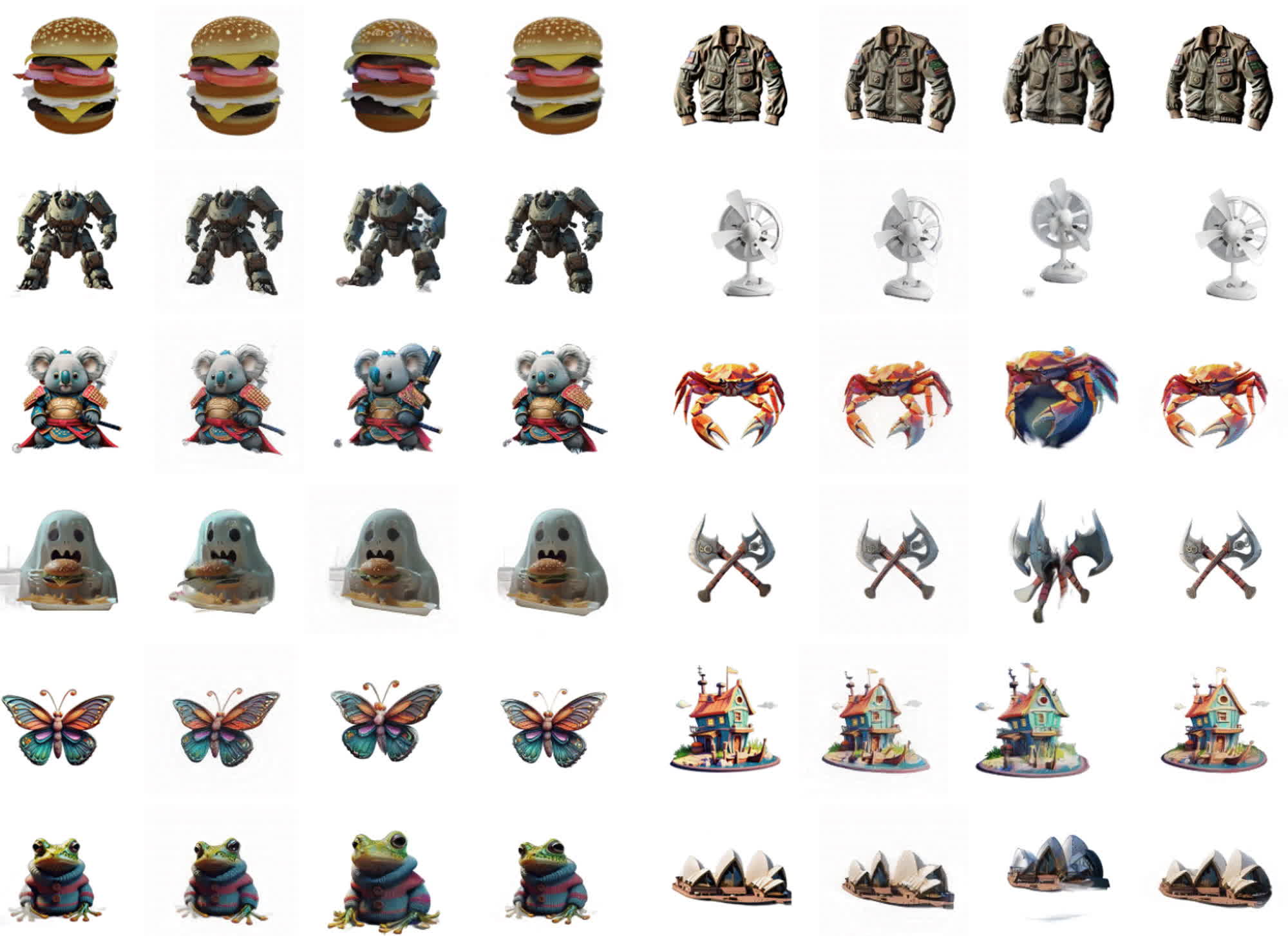

The VFusion3D base model was trained on nearly 3 million pieces of “synthetic multi-view data,” say the researchers, and is now able to generate a new 3D asset from a single (2D, we assume) image in just a few seconds. VFusion3D apparently outperforms other 3D generative models, and users reportedly prefer its results more than 90 percent of the time.

The official project page describes the pipeline used to develop VFusion3D. The researchers first used a limited amount of 3D data to fine-tune a video diffusion model, then turned that model into a multi-view video generator that acted as a “data engine.” The engine was used to generate large amounts of synthetic assets, which were eventually used to train VFusion3D itself.
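The three stages described above can be pictured with a toy Python sketch. It is purely illustrative: every class and function name here (`VideoDiffusionModel`, `build_synthetic_dataset`, `FeedForward3DModel`, `run_pipeline`) is a hypothetical stand-in rather than anything from Meta's code, and strings take the place of real images, videos, and neural networks.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MultiViewSample:
    """One synthetic training example: a prompt plus its generated views."""
    prompt: str
    views: List[str]  # placeholder strings standing in for image frames


class VideoDiffusionModel:
    """Hypothetical stand-in for a pretrained video diffusion model."""

    def __init__(self) -> None:
        self.multi_view_ready = False

    def fine_tune(self, renders_3d: List[str]) -> None:
        # Stage 1: a small amount of 3D data adapts the video model
        # so that it can produce consistent views around an object.
        self.multi_view_ready = len(renders_3d) > 0

    def generate_views(self, prompt: str, n_views: int = 4) -> List[str]:
        if not self.multi_view_ready:
            raise RuntimeError("fine-tune the model on 3D renders first")
        # Fake "frames": the prompt tagged with evenly spaced camera angles.
        return [f"{prompt}@{i * 360 // n_views}deg" for i in range(n_views)]


def build_synthetic_dataset(engine: VideoDiffusionModel,
                            prompts: List[str],
                            n_views: int = 4) -> List[MultiViewSample]:
    # Stage 2: the fine-tuned model acts as a "data engine".
    return [MultiViewSample(p, engine.generate_views(p, n_views))
            for p in prompts]


class FeedForward3DModel:
    """Hypothetical stand-in for the final image-to-3D model."""

    def __init__(self) -> None:
        self.samples_seen = 0

    def train(self, dataset: List[MultiViewSample]) -> None:
        # Stage 3: train on the synthetic multi-view data.
        self.samples_seen += len(dataset)

    def reconstruct(self, image: str) -> str:
        # Inference: a single 2D image in, a 3D asset out.
        return f"3d_asset({image})"


def run_pipeline(scarce_3d_renders: List[str],
                 prompts: List[str]
                 ) -> Tuple[FeedForward3DModel, List[MultiViewSample]]:
    engine = VideoDiffusionModel()
    engine.fine_tune(scarce_3d_renders)
    dataset = build_synthetic_dataset(engine, prompts)
    model = FeedForward3DModel()
    model.train(dataset)
    return model, dataset
```

Calling `run_pipeline(["chair.obj"], ["a red car", "a teapot"])` in this toy version returns a "trained" model plus two synthetic samples with four views each. The point is only the shape of the workflow: scarce 3D data is spent once on the video model, and everything downstream trains on data that model generates.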

VFusion3D can improve the quality of the 3D assets it generates if it uses a larger dataset for training, the researchers say. By using “stronger” video diffusion models and more 3D assets, the algorithm can evolve even further. The end goal is to give companies in the entertainment business a much easier way to create 3D graphics, though we hope there won’t be any underpaid, uncredited human workers hiding behind the sinister curtains of generative AI this time around.